Are we succeeding when it comes to convincing kids of the value of human-generated writing? What happens when we reframe the conversation by acknowledging that writing is technology?

Techne, Episteme, & Plato:

As a former English teacher, I feel like this post is a little bit of a betrayal. Like I've turned my back on my people and the noble struggle they continue to endure: namely, the art and science of teaching students how to write. Arguably one of humanity's most sacred and unique skills as a species.

In reality, this piece is not a betrayal; it's an attempt to reframe the conversation in the current context of AI and Large Language Models (like ChatGPT), and to do so by revisiting one of writing's first critics: the Greek philosopher Plato, who understood the practice on different terms than we do today.

For him the act of writing was still a novel creation, manufactured by humans and therefore something that probably felt a bit new... and unnatural. For us, however, writing has always been a part of our lived experience, a human capacity that has always already been part of our human form of life.

Like some of our students (but for different reasons, perhaps), Plato, by way of his teacher and literary protagonist Socrates, makes his ambivalence about writing well known, especially when comparing it to the skill of oratory, aided by a highly functional working memory.

We know this because Plato wrote down his criticisms in the Phaedrus, one of his many written recordings of Socrates' famous dialogues. He records Socrates' words as follows:

For Plato, writing was a form of technology, and one that threatened basic human cognitive capacities like our ability to think, remember, and know the true nature of things. He offered other arguments in support of this as well (that I'll address in a later post), but what I want to explore here are the parallels between Plato's worries about the impact of technology and our current conversation about the impact of AI.

Our history with technology over the millennia is a story of using our understanding or knowledge (what the Greeks called episteme) to identify, define, extract, and transfer the powers, capacities, skills, and artful techniques of man and nature (what the Greeks called techne) in order to optimize and multiply those capacities in machinic devices and mechanisms manufactured by humans. In other words, unlike any other species, we use our knowledge (episteme) to build technologies (techne) in order to offload capacities that are otherwise limited by the abilities of our human body. And in this case, writing helps us offload the demand to commit things to memory. It expands our ability to keep a record of things but reduces what sticks in our minds, therefore limiting our knowledge, according to Plato.

This history and entanglement with technology applies to the modern workplace as well, namely a “history of people outsourcing their labor to machines - beginning with rote, physical work (like weaving) and now involving some complex cognitive work” (de Cremer and Kasparov, p.97). Think of how we describe automobiles in terms of horsepower because the machine replicated and increased the speed and strength of one of nature’s most impressive specimens. The power demonstrated by the machine in no way threatened humanity’s place in nature; if anything it confirmed our ingenuity. But AI is different, just as writing was for Plato, because these technologies attempt to migrate powers of the human mind to machines with technical and computational capacity that far exceeds ours.

Technology, from this perspective, threatens not just our technical capabilities as a human species, but our seat of supremacy when it comes to knowledge and cognition as well. In other words, episteme is losing currency in the post-knowledge economy of the information age. If machines are performing tasks, calculations, and scores of music as well as (and even better than) humans, and what you know no longer ensures you a valued role in our ever-changing society, how should we face this predicament from a people-centered perspective? Was Plato on to something?

Stone Tools, Chainsaws, and Why We Still Write:

Enter the year 2022. It's November, and for the first time, many of us witnessed the power of Large Language Models like ChatGPT, powered by deeply layered, complex neural networks whose workings remain a mystery to us as human observers. Like Plato, many of us felt the discomfort of the uncanny, the sense that something unnatural was unfolding before us.

And with the start of a new school year, faculty are facing an unprecedented challenge in the era of Large Language Models: How do we convince students that the juice is worth the squeeze when it comes to engaging in the arduous (and at times slow) process of writing? A provocation I propose is, What if calling writing a competency complicates rather than clarifies our cause? With this in mind, what could we learn from Plato?

I had the opportunity to hear Eric Hudson speak at Woodward Academy last Tuesday here in Atlanta. His talk, "The Power and Potential of Generative AI in Schools," was fantastic, and at one point, he showcased this quote from Ethan Mollick's piece, "The Homework Apocalypse," on one of his slides:

In the age of instant generative AI, students need to know the why when we ask them to engage in difficult processes like writing. Taking seriously Plato's reminder that writing is a form of technology, the fact that students are having a hard time understanding the why becomes all the more clear: it's like asking them to use stone tools in a workshop full of freely available, state-of-the-art power tools. I'm not using this metaphor to denigrate writing, but to understand more deeply the perspective of students who "want to accomplish more than they did before."

We need to reframe the messaging because of course human-generated writing is an invaluable technical capacity that every learner needs to master, but what if we stopped calling it a competency and saw it instead as an appropriate technological medium for demonstrating certain human competencies? For instance, critical thinking is a competency; so is communicating effectively or even storytelling, perhaps. I think of self-knowledge and self-awareness as a competency too, and writing is one of the most beautiful and sacred ways to make these competencies visible, one where human artistry never ceases to surprise and inspire us. In this sense, I'm not convinced that Plato's prediction about forgetfulness proves to be that important or concerning.

Competencies and Technology in a Purposeful Context:

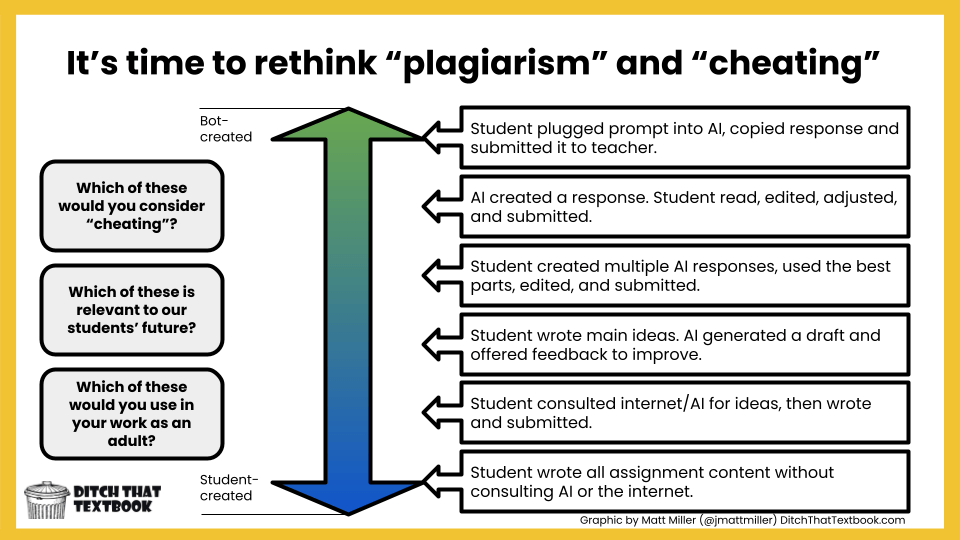

On that Tuesday at Woodward, I really started thinking about this idea, thanks to what Eric shared. After his session, Sarah Hanawald spoke as well and shared a graphic to help us frame a better conversation around when it is appropriate to seek assistance or perhaps even automate parts of the writing process using AI, versus when it is necessary to limit the task to human efforts alone. To spark the conversation, she handed out the following graphic from Ditch That Textbook:

When we start thinking about the why behind each writing occasion or assignment by identifying the competency at play, the two-dimensional graphic starts to look a little more three-dimensional. For instance, if it's about communicating information efficiently, there may be a lot of appropriate use cases for automating parts of the process. If it's about engaging in critical, reflective thinking, perhaps relying less on AI is necessary, at least at first.

If we want to succeed in getting kids to see writing less as a stone tool and more as an invaluable technique for expressing one's competencies and cognitive capacities, then we have to do more than say that writing is important in and of itself (even if we know that to be true due to our greater lived experience). Because if it's just writing for the sake of writing, they will work smart when they might need to be working hard, and that's a problematic outcome that can best be summed up by finishing Plato's written statement cited at the beginning of this article:

AI will tell our students lots of stuff (whether what it tells them is true is for my next post), but it will not teach them like a human mentor can and should. This means students need to write because it's also an opportunity for us as teachers to connect with them in those invaluable teachable moments. It's a way to cultivate wisdom and build their cognitive capacity. We just need to be more explicit about why we're practicing this timeless technique as well as how it connects to their lived experience. But keep in mind, AI is forever part of their lived experience moving forward, so we must coach them on how to use AI responsibly and in ways that augment instead of substitute for their performance as writers.

Sources:

- Plato. Phaedrus from The Collected Dialogues of Plato. Trans. R. Hackforth (1952). Eds. Edith Hamilton and Huntington Cairns. Princeton University Press, 2009.

- F. I. G. Rawlins. “Episteme and Techne.” Philosophy and Phenomenological Research, Vol. 10, No. 3 (Mar. 1950), pp. 389-397.

- David de Cremer and Garry Kasparov. “AI Should Augment Human Intelligence, Not Replace It.” Harvard Business Review. Harvard Business Review Press, Winter 2021.
